Strategic partnership agreement between Oslandia and OpenGIS.ch on QField

Who are we?

For those unfamiliar with Oslandia, OpenGIS.ch, or even QGIS, let’s refresh your memory:

For those unfamiliar with Oslandia, OpenGIS.ch, or even QGIS, let’s refresh your memory:

Oslandia is a French company specializing in open-source Geographic Information Systems (GIS). Since our establishment in 2009, we have been providing consulting, development, and training services in GIS, with reknown expertise. Oslandia is a dedicated open-source player and the largest contributor to the QGIS solution in France.

Oslandia is a French company specializing in open-source Geographic Information Systems (GIS). Since our establishment in 2009, we have been providing consulting, development, and training services in GIS, with reknown expertise. Oslandia is a dedicated open-source player and the largest contributor to the QGIS solution in France.

As for OPENGIS.ch, they are a Swiss company specializing in the development of open-source GIS software. Founded in 2011, OPENGIS.ch is the largest Swiss contributor to QGIS. OPENGIS.ch is the creator of QField, the most widely used open-source mobile GIS solution for geomatics professionals.

As for OPENGIS.ch, they are a Swiss company specializing in the development of open-source GIS software. Founded in 2011, OPENGIS.ch is the largest Swiss contributor to QGIS. OPENGIS.ch is the creator of QField, the most widely used open-source mobile GIS solution for geomatics professionals.

OPENGIS.ch also offers QFieldCloud as a SaaS or on-premise solution for collaborative field project management.

Some may still be unfamiliar with #QGIS ?

Some may still be unfamiliar with #QGIS ?

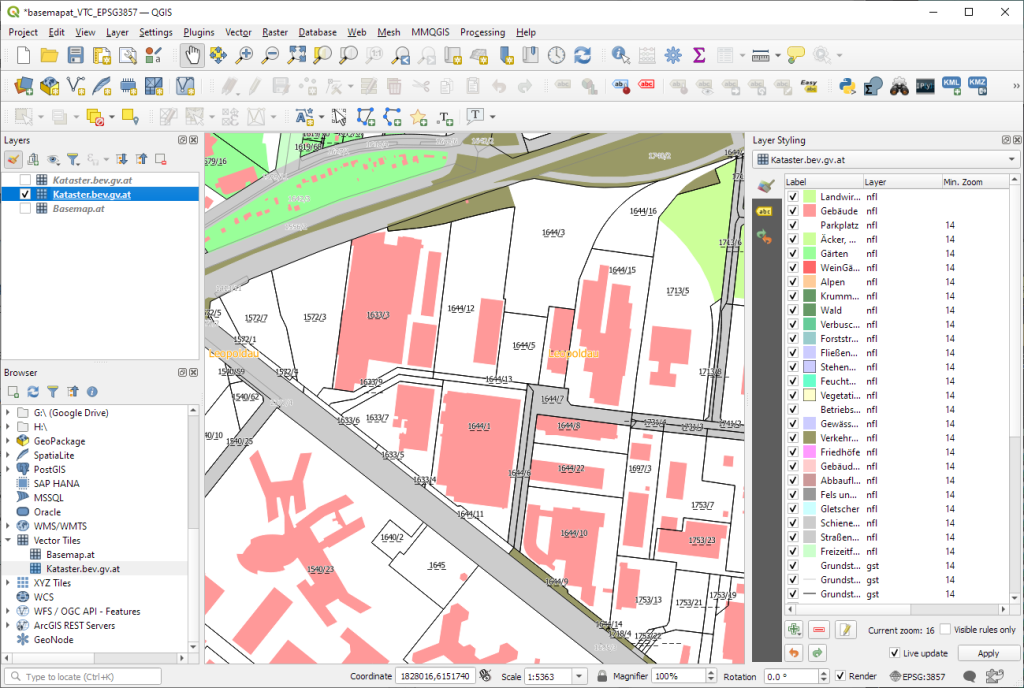

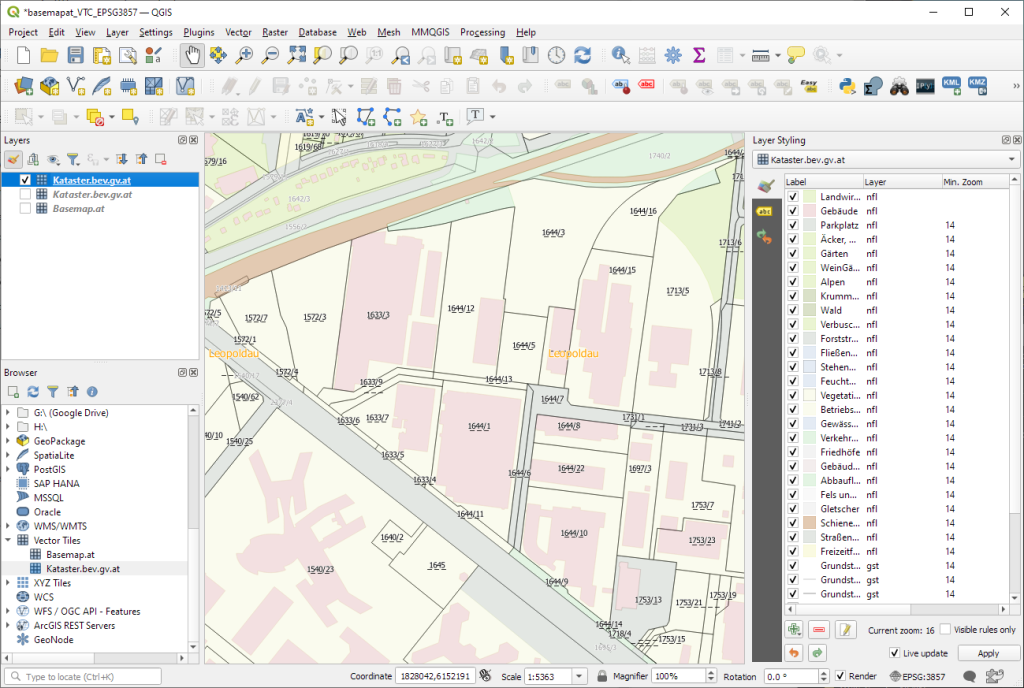

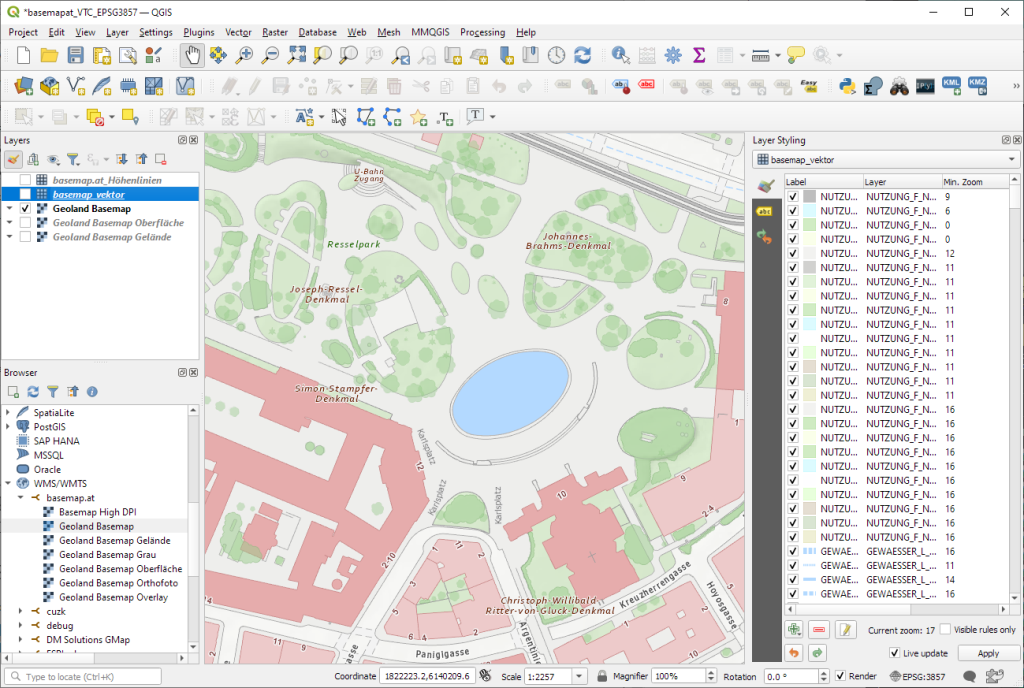

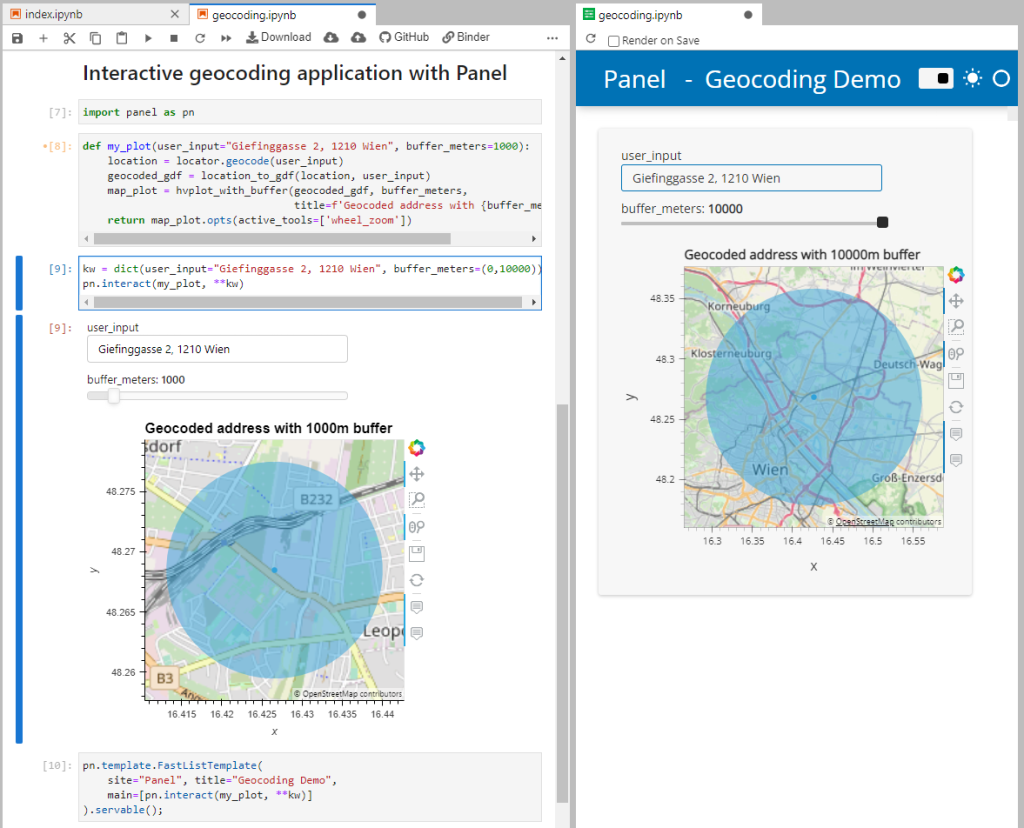

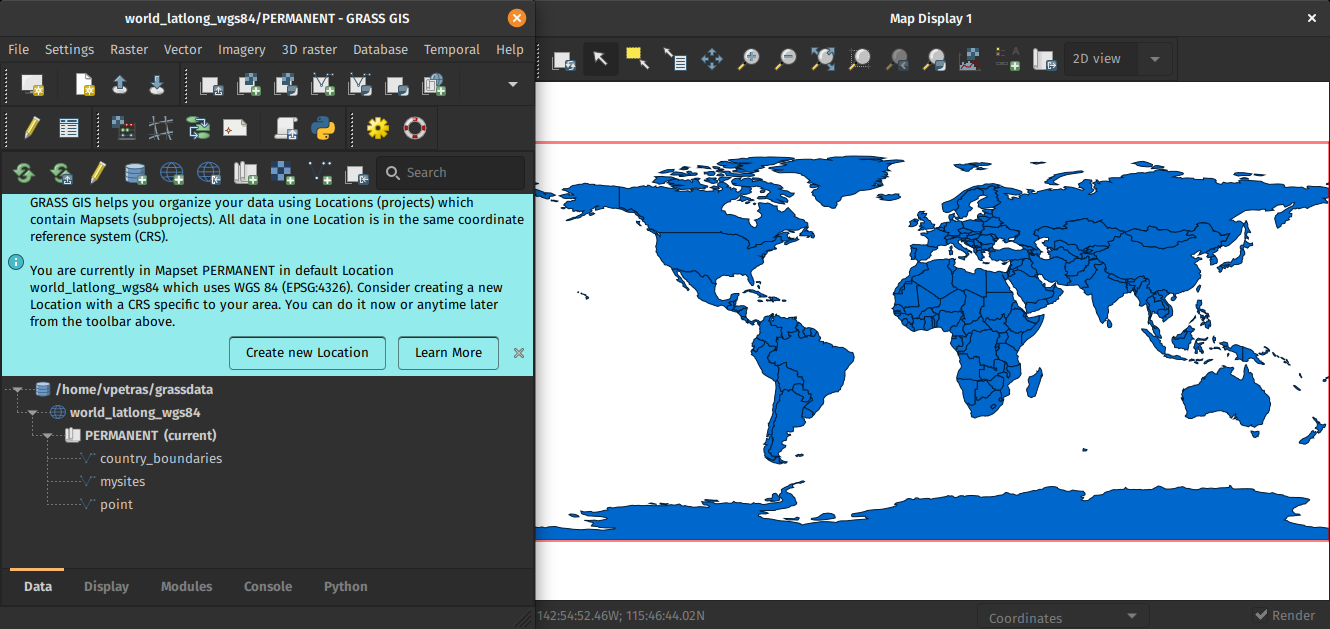

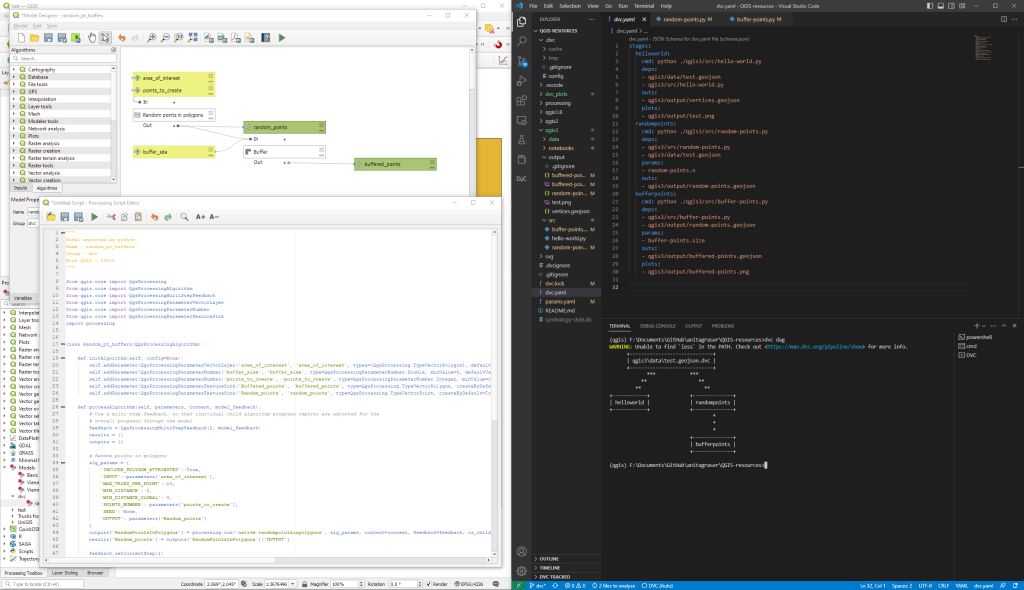

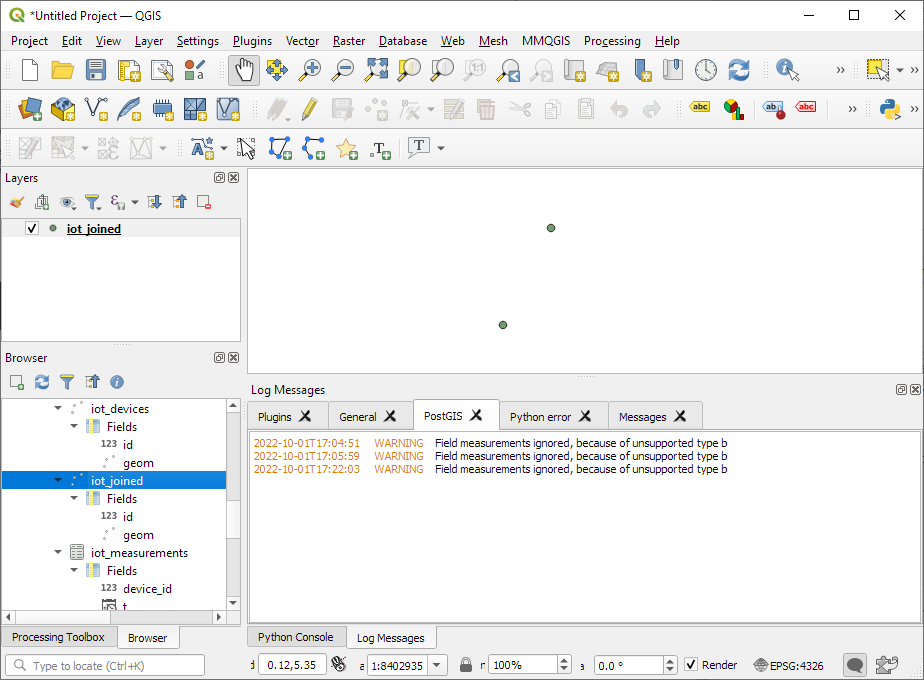

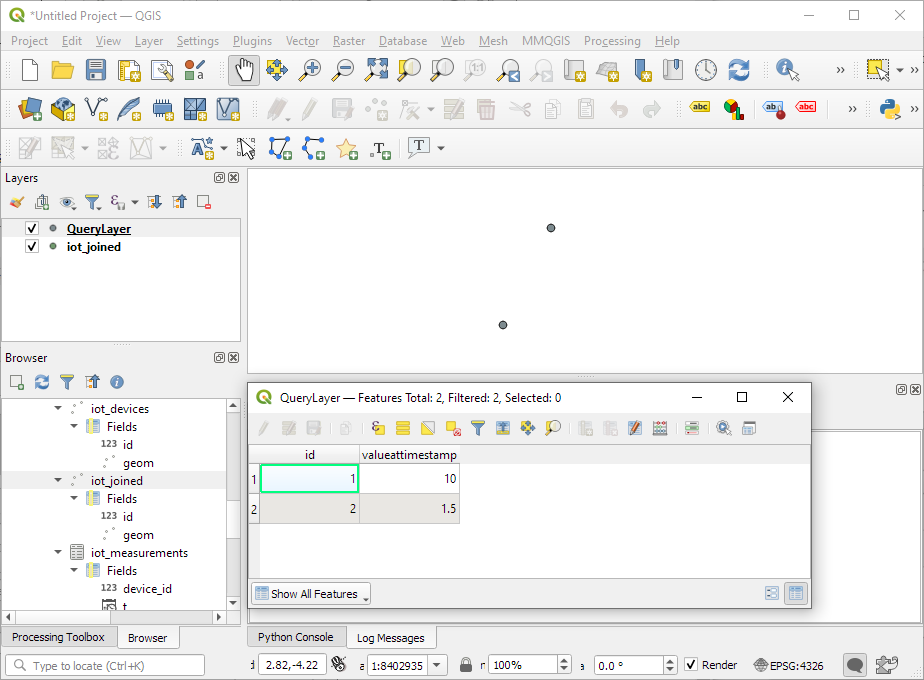

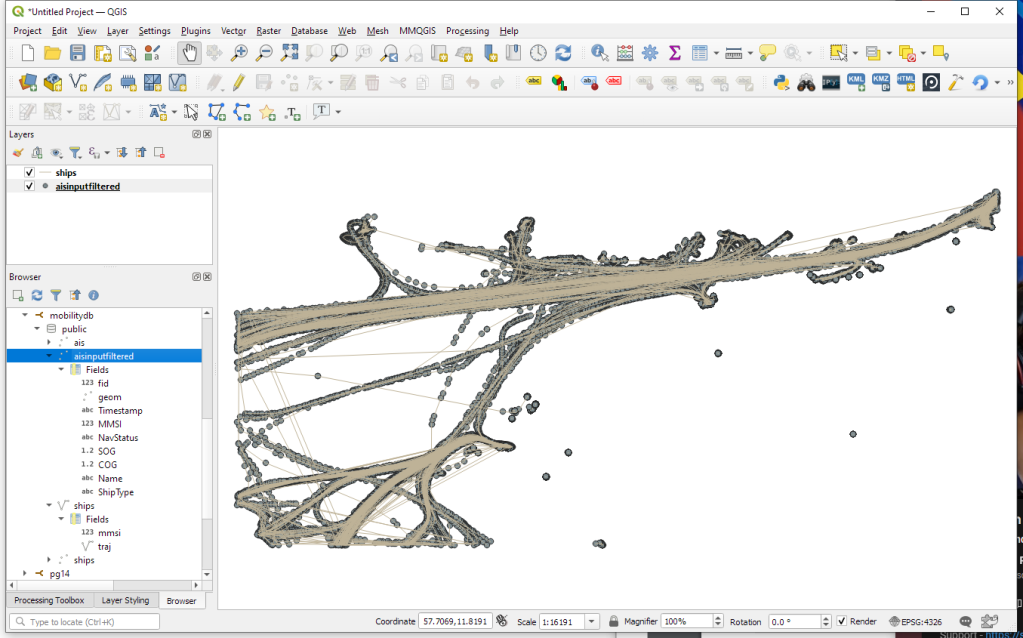

It is a free and open-source Geographic Information System that allows creating, editing, visualizing, analyzing, and publicating geospatial data. QGIS is a cross-platform software that can be used on desktops, servers, as a web application, or as a development library.

QGIS is open-source software developed by multiple contributors worldwide. It is an official project of the OpenSource Geospatial Foundation (OSGeo) and is supported by the QGIS.org association. See https://qgis.org

A Partnership?

Today, we are delighted to announce our strategic partnership aimed at strengthening and promoting QField, the mobile application companion of QGIS Desktop.

Today, we are delighted to announce our strategic partnership aimed at strengthening and promoting QField, the mobile application companion of QGIS Desktop.

This partnership between Oslandia and OPENGIS.ch is a significant step for QField and open-source mobile GIS solutions. It will consolidate the platform, providing users worldwide with simplified access to effective tools for collecting, managing, and analyzing geospatial data in the field.

This partnership between Oslandia and OPENGIS.ch is a significant step for QField and open-source mobile GIS solutions. It will consolidate the platform, providing users worldwide with simplified access to effective tools for collecting, managing, and analyzing geospatial data in the field.

QField, developed by OPENGIS.ch, is an advanced open-source mobile application that enables GIS professionals to work efficiently in the field, using interactive maps, collecting real-time data, and managing complex geospatial projects on Android, iOS, or Windows mobile devices.

QField, developed by OPENGIS.ch, is an advanced open-source mobile application that enables GIS professionals to work efficiently in the field, using interactive maps, collecting real-time data, and managing complex geospatial projects on Android, iOS, or Windows mobile devices.

QField is cross-platform, based on the QGIS engine, facilitating seamless project sharing between desktop, mobile, and web applications.

QField is cross-platform, based on the QGIS engine, facilitating seamless project sharing between desktop, mobile, and web applications.

QFieldCloud (https://qfield.cloud), the collaborative web platform for QField project management, will also benefit from this partnership and will be enhanced to complement the range of tools within the QGIS platform.

QFieldCloud (https://qfield.cloud), the collaborative web platform for QField project management, will also benefit from this partnership and will be enhanced to complement the range of tools within the QGIS platform.

Reactions

At Oslandia, we are thrilled to collaborate with OPENGIS.ch on QGIS technologies. Oslandia shares with OPENGIS.ch a common vision of open-source software development: a strong involvement in development communities, work in respect with the ecosystem, an highly skilled expertise, and a commitment to industrial-quality, robust, and sustainable software development.

At Oslandia, we are thrilled to collaborate with OPENGIS.ch on QGIS technologies. Oslandia shares with OPENGIS.ch a common vision of open-source software development: a strong involvement in development communities, work in respect with the ecosystem, an highly skilled expertise, and a commitment to industrial-quality, robust, and sustainable software development.

With this partnership, we aim to offer our clients the highest expertise across all software components of the QGIS platform, from data capture to dissemination.

With this partnership, we aim to offer our clients the highest expertise across all software components of the QGIS platform, from data capture to dissemination.

On the OpenGIS.ch side, Marco Bernasocchi adds:

On the OpenGIS.ch side, Marco Bernasocchi adds:

The partnership with Oslandia represents a crucial step in our mission to provide leading mobile GIS tools with a genuine OpenSource credo. The complementarity of our skills will accelerate the development of QField and QFieldCloud and meet the growing needs of our users.

Commitment to open source

Both companies are committed to continue supporting and improving QField and QFieldCloud as open-source projects, ensuring universal access to this high-quality mobile GIS solution without vendor dependencies.

Both companies are committed to continue supporting and improving QField and QFieldCloud as open-source projects, ensuring universal access to this high-quality mobile GIS solution without vendor dependencies.

Ready for field mapping ?

And now, are you ready for the field?

And now, are you ready for the field?

So, download QField (https://qfield.org/get), create projects in QGIS, and share them on QFieldCloud!

If you need training, support, maintenance, deployment, or specific feature development on these platforms, don’t hesitate to contact us. You will have access to the best experts available: [email protected].

If you need training, support, maintenance, deployment, or specific feature development on these platforms, don’t hesitate to contact us. You will have access to the best experts available: [email protected].

Head over

Head over

New print designs on the way: these are some snippets from a project I began last year to map the provinces of Spain in a retro style, which I've decided to revisit this summer.

New print designs on the way: these are some snippets from a project I began last year to map the provinces of Spain in a retro style, which I've decided to revisit this summer.