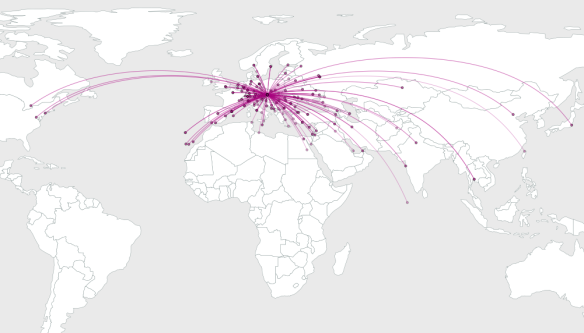

The GeoNames dataset provides a list of global placenames, their

location and some additional information such as population and so on.

It is published under the Creative Commons Attribution 3.0 License,

which allows you to use the data for your own purposes. You can download

the data by country, or the entire global dataset. In this article, I

will walk you though how I downloaded the entire dataset, loaded it into

PostgreSQL and added a geometry column so that I could view it in QGIS.

Note that you can substitute these instructions for a specific country's

data easily.

First up, lets get the data from the geonames download page!

wget -c http://download.geonames.org/export/dump/allCountries.zip

Note the download is around 196mb so if you live in an internet

backwater like I do, expect it to take a little while. If the download

gets disconnected, just rerun the same command again - the '-c' option

tells wget to continue where it left off last time.

Once the data is downloaded, unzip it:

unzip allCountries.zip

You should now have a text file called allCountries.txt weighing in at

just under 900mb. Next we can load it into PostgreSQL using a variation

of this article. I highly recommend the use of schemas to partition

your database into logical units. In the code listings that follow, it

is assumed you have a schema called 'world'. If you need to create it,

simply do:

create schema world;

From the psql prompt. Since I am only interested in the geoname table at

the moment I simply do this in my database.

create table world.geoname (

geonameid int,

name varchar(200),

asciiname varchar(200),

alternatenames varchar(8000),

latitude float,

longitude float,

fclass char(1),

fcode varchar(10),

country varchar(2),

cc2 varchar(60),

admin1 varchar(20),

admin2 varchar(80),

admin3 varchar(20),

admin4 varchar(20),

population bigint,

elevation int,

gtopo30 int,

timezone varchar(40),

moddate date

);

You will notice that I extended the alternatenames field size from the

original tutorial's 4000 characters to 8000 characters in order to

accommodate some longer entries that were causing my imports to fail

with 4000 chars.

Next we can import the data (also from the psql prompt):

copy world.geoname (geonameid,name,asciiname,alternatenames,

latitude,longitude,fclass,fcode,country,cc2,

admin1,admin2,admin3,admin4,population,elevation,gtopo30,

timezone,moddate) from '/home/web/allCountries.txt' null as '';

Once again this is similar to the import line used by the original

article I used, except I have used a full path to my allCountries.txt

file. The import may take a little while depending on the speed of your

computer.

When it is completed, you should have a bunch of data (~7.5 million

records) in your table:

gis=# select count(*) from world.geoname ;

count

---------

7664712

(1 row)

It is going to be useful to have a unique id for every record - QGIS for

one would like to have it, so lets add one (in addition to the geonameid

field):

alter table world.geoname add column id serial not null;

CREATE UNIQUE INDEX idx_geoname_id ON world.geoname (id);

Because I know I will be using some other fields as basis for subset

queries etc., I added some indexes on those too:

CREATE INDEX idx_geoname_population ON world.geoname (population);

CREATE INDEX idx_geoname_fcode ON world.geoname(fcode);

Ok thats all great, but there is no geometry column yet so we can't view

this in QGIS easily. So lets GIS enable the table! First we add a new

geometry column:

alter table world.geoname add column the_geom geometry;

Now we populate the geometry column:

update world.geoname set the_geom = st_makepoint(longitude,latitude);

Next we add a constraint to ensure that the column always contains a

point record or is null

alter table world.geoname add constraint enforce_geotype_the_geom

CHECK (geometrytype(the_geom) = 'POINT'::text OR the_geom IS NULL);

Finally lets index the table on the geometry field:

CREATE INDEX idx_geoname_the_geom ON world.geoname USING gist(the_geom);

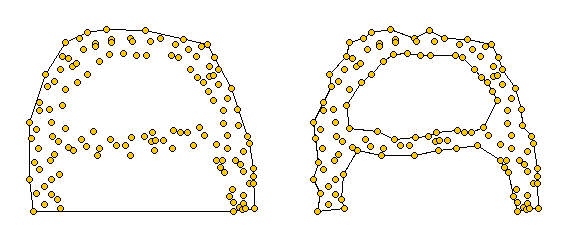

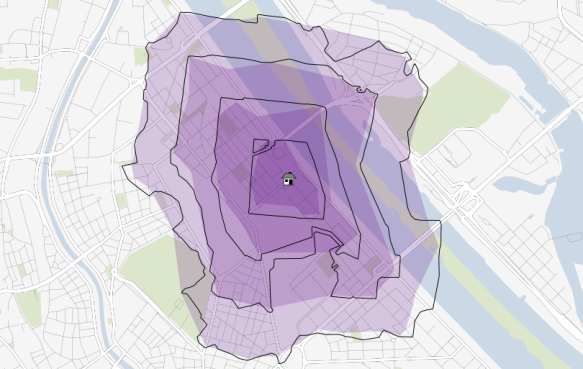

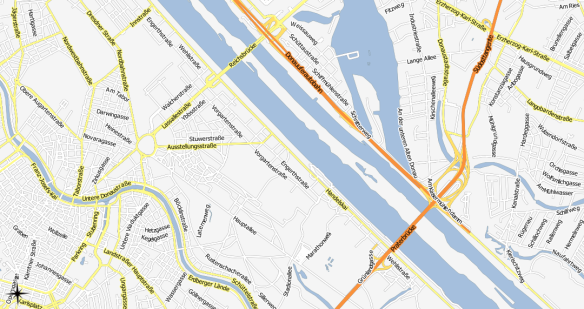

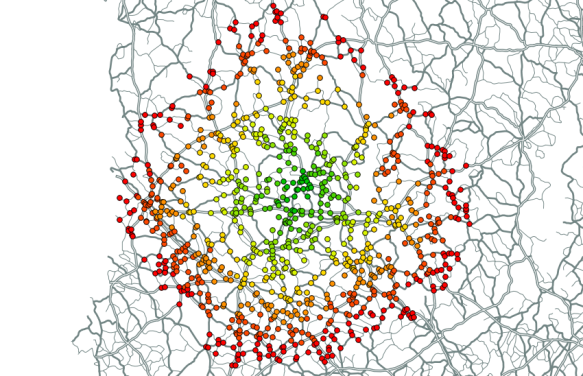

Ok next we can connect to the database using QGIS and view our data!

Note that you may want to filter the data or set up scale dependent

visibility so that QGIS doesn't try to render all 7.5 million points

when you zoom out.

I added a query filter like this to the layer properties -> general tab

-> subset:

"population" >= 10000 AND fcode != 'PPLA' and fcode != 'PCLI' AND fcode != 'ADM1'

You should experiment and read the geonames documentation in order to

define a filter that is useful for your purposes.

The Geonames dataset is a wonderful asset to the GIS community,

particularly to those of us who value Free Software and Free Data.

Update 6 Aprl 2011:

I encountered one issue with the above procedure: the SRID for the

imported points is -1 when loaded instead of 4326. This cause problems

e.g. in mapserver you get an error like this:

ERROR: Operation on two geometries with different SRIDs

To fix the issue I ran the following update query to assign all points a

proper SRID:

update world.geoname set the_geom = ST_SETSRID(the_geom,4326);

Note that it takes quite a while to run. When it completes, you can

verify that the points are all in the correct CRS by running this little

query:

gis=# select distinct ST_SRID(the_geom) from world.geoname;

Which should produce something like this:

st_srid

---------

4326

(1 row)

For good measure, I also added the geoname table to my geometry columns

table:

insert into geometry_columns (f_table_catalog,f_table_schema,f_table_name,f_geometry_column,

coord_dimension,srid,type) values ('','world','geoname','the_geom',2,4326,'POINT');

Lastly I also gave select permissions to my readonly user (which I use

when publishing in a web environment):

grant usage on schema world to readonly;

grant select on world to readonly;

I have also fixed the formatting of some of the SQL snippets above for

those who struggled to read it within the width of this page.