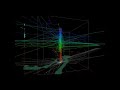

Today’s post is a short tutorial for creating trajectory animations with a fadeout effect using QGIS Time Manager. This is the result we are aiming for:

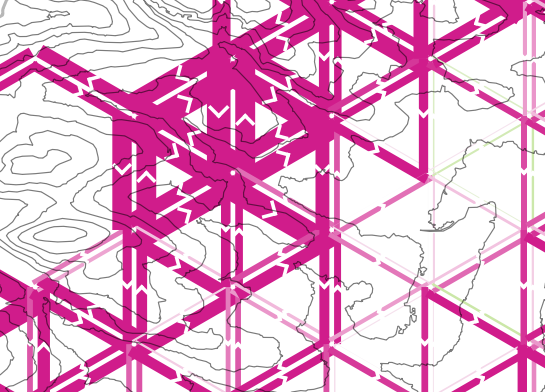

The animation shows the current movement in pink which fades out and leaves behind green traces of the trajectories.

About the data

GeoLife GPS Trajectories were collected within the (Microsoft Research Asia) Geolife project by 182 users in a period of over three years (from April 2007 to August 2012). [1,2,3] The GeoLife GPS Trajectories download contains many text files organized in multiple directories. The data files are basically CSVs with 6 lines of header information. They contain the following fields:

Field 1: Latitude in decimal degrees.

Field 2: Longitude in decimal degrees.

Field 3: All set to 0 for this dataset.

Field 4: Altitude in feet (-777 if not valid).

Field 5: Date – number of days (with fractional part) that have passed since 12/30/1899.

Field 6: Date as a string.

Field 7: Time as a string.

Data prep: PostGIS

Since any kind of GIS operation on text files will be quite inefficient, I decided to load the data into a PostGIS database. This table of millions of GPS points can then be sliced into appropriate chunks for exploration, for example, a day in Beijing:

CREATE MATERIALIZED VIEW geolife.beijing

AS SELECT trajectories.id,

trajectories.t_datetime,

trajectories.t_datetime + interval '1 day' as t_to_datetime,

trajectories.geom,

trajectories.oid

FROM geolife.trajectories

WHERE st_dwithin(trajectories.geom,

st_setsrid(

st_makepoint(116.3974589,

39.9388838),

4326),

0.1)

AND trajectories.t_datetime >= '2008-11-11 00:00:00'

AND trajectories.t_datetime < '2008-11-12 00:00:00'

WITH DATA

Trajectory viz: a fadeout effect for point markers

The idea behind this visualization is to show both the current movement as well as the history of the trajectories. This can be achieved with a fadeout effect which leaves behind traces of past movement while the most recent positions are highlighted to stand out.

Map tiles by Stamen Design, under CC BY 3.0. Data by OpenStreetMap, under ODbL.

This effect can be created using a Single Symbol renderer with a marker symbol with two symbol layers: one layer serves as the highlights layer (pink) while the second layer represents the traces (green) which linger after the highlights disappear. Feature blending is used to achieve the desired effect for overlapping markers.

The highlights layer has two expression-based properties: color and size. The color fades to white and the point size shrinks as the point ages. The age can be computed by comparing the point’s t_datetime timestamp to the Time Manager animation time $animation_datetime.

This expression creates the color fading effect:

color_hsv(

311,

scale_exp(

minute(age($animation_datetime,"t_datetime")),

0,60,

100,0,

0.2

),

90

)

and this expression makes the point size shrink:

scale_exp(

minute(age($animation_datetime,"t_datetime")),

0,60,

24,0,

0.2

)

Outlook

I’m currently preparing this and a couple of other examples for my Time Manager workshop at the upcoming 1st QGIS conference in Nødebo. The workshop materials will be made available online afterwards.

Literature

[1] Yu Zheng, Lizhu Zhang, Xing Xie, Wei-Ying Ma. Mining interesting locations and travel sequences from GPS trajectories. In Proceedings of International conference on World Wild Web (WWW 2009), Madrid Spain. ACM Press: 791-800.

[2] Yu Zheng, Quannan Li, Yukun Chen, Xing Xie, Wei-Ying Ma. Understanding Mobility Based on GPS Data. In Proceedings of ACM conference on Ubiquitous Computing (UbiComp 2008), Seoul, Korea. ACM Press: 312-321.

[3] Yu Zheng, Xing Xie, Wei-Ying Ma, GeoLife: A Collaborative Social Networking Service among User, location and trajectory. Invited paper, in IEEE Data Engineering Bulletin. 33, 2, 2010, pp. 32-40.