Movement data in GIS #3: visualizing massive trajectory datasets

In the fist two parts of the Movement Data in GIS series, I discussed modeling trajectories as LinestringM features in PostGIS to overcome some common issues of movement data in GIS and presented a way to efficiently render speed changes along a trajectory in QGIS without having to split the trajectory into shorter segments.

While visualizing individual trajectories is important, the real challenge is trying to visualize massive trajectory datasets in a way that enables further analysis. The out-of-the-box functionality of GIS is painfully limited. Except for some transparency and heatmap approaches, there is not much that can be done to help interpret “hairballs” of trajectories. Luckily researchers in visual analytics have already put considerable effort into finding solutions for this visualization challenge. The approach I want to talk about today is by Andrienko, N., & Andrienko, G. (2011). Spatial generalization and aggregation of massive movement data. IEEE Transactions on visualization and computer graphics, 17(2), 205-219. and consists of the following main steps:

- Extracting characteristic points from the trajectories

- Grouping the extracted points by spatial proximity

- Computing group centroids and corresponding Voronoi cells

- Deviding trajectories into segments according to the Voronoi cells

- Counting transitions from one cell to another

The authors do a great job at describing the concepts and algorithms, which made it relatively straightforward to implement them in QGIS Processing. So far, I’ve implemented the basic logic but the paper contains further suggestions for improvements. This was also my first pyQGIS project that makes use of the measurement value support in the new geometry engine. The time information stored in the m-values is used to detect stop points, which – together with start, end, and turning points – make up the characteristic points of a trajectory.

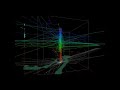

The following animation illustrates the current state of the implementation: First the “hairball” of trajectories is rendered. Then we extract the characteristic points and group them by proximity. The big black dots are the resulting group centroids. From there, I skipped the Voronoi cells and directly counted transitions from “nearest to centroid A” to “nearest to centroid B”.

From thousands of individual trajectories to a generalized representation of overall movement patterns (data credits: GeoLife project, map tiles: Stamen, map data: OSM)

The resulting visualization makes it possible to analyze flow strength as well as directionality. I have deliberately excluded all connections with a count below 10 transitions to reduce visual clutter. The cell size / distance between point groups – and therefore the level-of-detail – is one of the input parameters. In my example, I used a target cell size of approximately 2km. This setting results in connections which follow the major roads outside the city center very well. In the city center, where the road grid is tighter, trajectories on different roads mix and the connections are less clear.

Since trajectories in this dataset are not limited to car trips, it is expected to find additional movement that is not restricted to the road network. This is particularly noticeable in the dense area in the west where many slow trajectories – most likely from walking trips – are located. The paper also covers how to ensure that connections are limited to neighboring cells by densifying the trajectories before computing step 4.

Running the scripts for over 18,000 trajectories requires patience. It would be worth evaluating if the first three steps can be run with only a subsample of the data without impacting the results in a negative way.

One thing I’m not satisfied with yet is the way to specify the target cell size. While it’s possible to measure ellipsoidal distances in meters using QgsDistanceArea (irrespective of the trajectory layer’s CRS), the initial regular grid used in step 2 in order to group the extracted points has to be specified in the trajectory layer’s CRS units – quite likely degrees. Instead, it may be best to transform everything into an equidistant projection before running any calculations.

It’s good to see that PyQGIS enables us to use the information encoded in PostGIS LinestringM features to perform spatio-temporal analysis. However, working with m or z values involves a lot of v2 geometry classes which work slightly differently than their v1 counterparts. It certainly takes some getting used to. This situation might get cleaned up as part of the QGIS 3 API refactoring effort. If you can, please support work on QGIS 3. Now is the time to shape the PyQGIS API for the following years!